- 🌎 Open VLM Leaderboard: VLMEvalKit Evaluation Results Collection
- 🌎 Open VLM Video Leaderboard: VLMEvalKit evaluation results on video understanding benchmarks
- 🥇 Open LMM Reasoning Leaderboard: A leaderboard that demonstrates LMM reasoning capabilities
- 🚀 MMBench Leaderboard: Explore MMBench Leaderboard data


Papers

CompassVerifier: A Unified and Robust Verifier for LLMs Evaluation and Outcome Reward

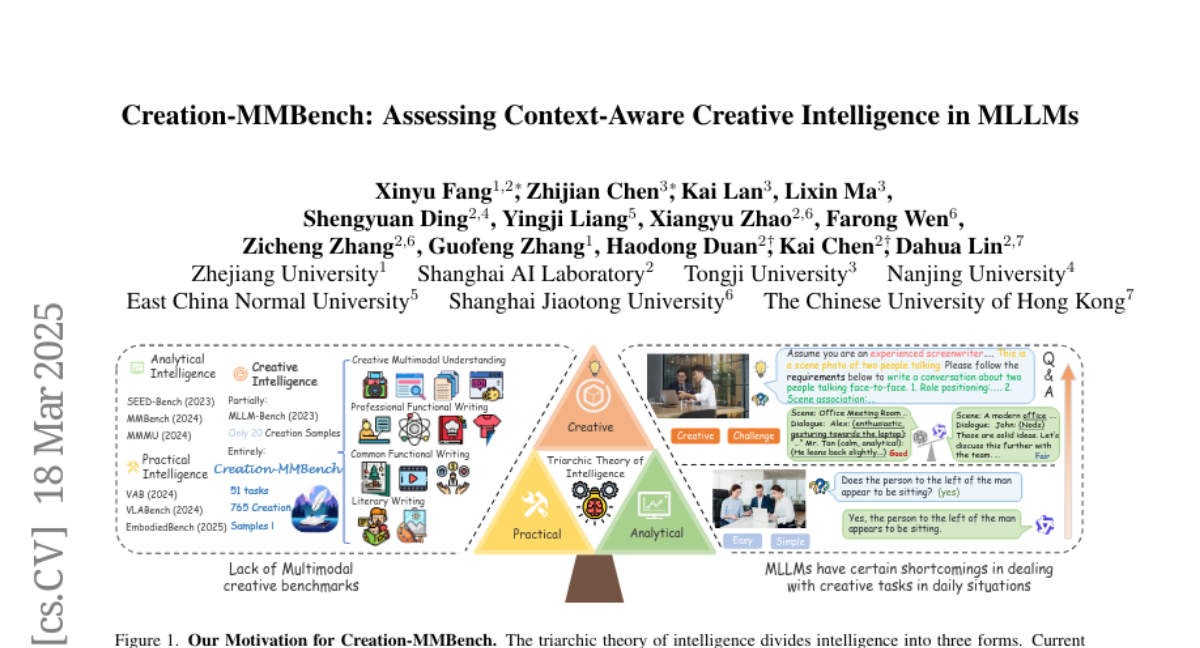

Creation-MMBench: Assessing Context-Aware Creative Intelligence in MLLM

👋 Join us on Discord and WeChat

Follow us on GitHub

OpenCompass is a platform focused on the evaluation of AGI, including Large Language Models and Multi-modality Models. We aim to:

- develop high-quality libraries that reduce the difficulty of evaluation

- provide convincing leaderboards that improve the understanding of large models

- create powerful toolchains targeting a variety of abilities and tasks

- build solid benchmarks to support large model research


Spaces (18)

ATLAS Benchmark

ATLAS for Frontier Scientific Benchmark

RISEBench Gallery

A Gallery of Generation Results on RISEBench

Open LMM Spatial Leaderboard

A leaderboard for LMM spatial understanding capabilities

Open LMM Subjective Leaderboard

VLMEvalKit Subjective Benchmark Results

CompassAcademic Leaderboard Full Version

Compass Academic Leaderboard Full Version

Open LMM Reasoning Leaderboard

A leaderboard that demonstrates LMM reasoning capabilities